AI thrives on data. Without large, diverse, and representative datasets, even the most sophisticated models will fall short. But in many sensitive areas—like detecting harmful or abusive content—collecting real-world data is extremely difficult. Privacy concerns, ethical constraints, and the emotional toll on annotators all mean researchers often work with small or outdated datasets.

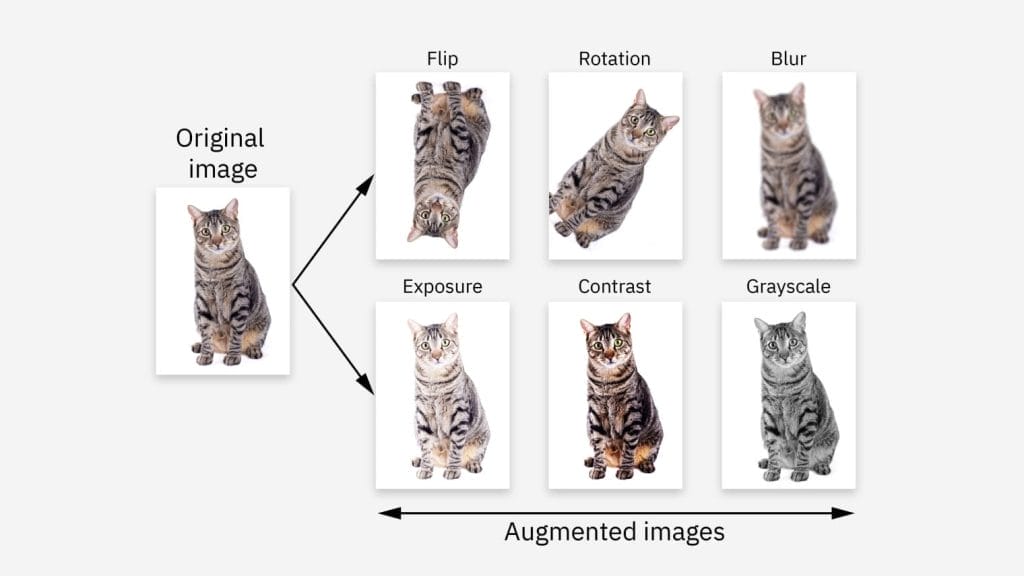

To bridge the gap, the field has embraced data augmentation. This means creating new training examples from existing ones, or generating fresh samples using large language models (LLMs) such as GPT-4. In theory, augmentation is the perfect solution: it scales quickly, protects people from exposure to harmful content, and allows researchers to generate almost limitless data.

But as we’ve learned—and as research confirms—synthetic data is not a cure-all. It helps fill gaps, but it can’t fully replace the messiness, nuance, and unpredictability of the real world.

What Research Shows about Data Augmentation

Kazemi et al. (2025) found that combining small amounts of real data with larger pools of LLM-generated data slightly improved performance in harmful content detection. Synthetic examples can stretch limited resources and boost results when used carefully.

But the quality of synthetic data depends on how it is generated. Poor prompts produce unrealistic outputs, and even advanced LLMs often filter out toxic or extreme content. Kumar et al. (2024) had to “jailbreak” models, bypassing their safety filters, just to get authentic bullying language, which shows how difficult it is to capture harsher, real-world patterns.

Another key limitation: most datasets are static snapshots. They don’t capture how language evolves over time—new slang, memes, or platform-specific behaviors—which leaves models less prepared for emerging trends.
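One way to make this limitation visible is to evaluate a model separately on each year of data rather than on a single pooled test set. The snippet below is a minimal sketch, not a description of any cited study's method. It assumes each example is a dictionary with hypothetical `text`, `label`, and `year` fields, and that `predict` is any function mapping text to a label.

```python
from collections import defaultdict


def split_by_year(examples, cutoff_year):
    """Train on examples up to cutoff_year and hold out everything newer."""
    train = [e for e in examples if e["year"] <= cutoff_year]
    holdout = [e for e in examples if e["year"] > cutoff_year]
    return train, holdout


def accuracy_by_year(examples, predict):
    """Return {year: accuracy} so a drop on recent years is easy to spot."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for e in examples:
        total[e["year"]] += 1
        if predict(e["text"]) == e["label"]:
            correct[e["year"]] += 1
    return {year: correct[year] / total[year] for year in sorted(total)}
```

If accuracy on the newest years is clearly lower than on the years the model was trained on, that is a sign the training snapshot has gone stale.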

Why Synthetic Data Falls Short

AI-generated text is often too clean, too polished, and too generic. It misses the slang, in-jokes, emoji, and shifting cultural references that real users employ every day. Content filters make the problem worse, since they prevent the generation of the most severe or explicit cases, leaving synthetic datasets biased toward mild examples.

This isn’t unique to language. In self-driving research, driving systems are trained on vast numbers of simulated scenarios but can still fail in unfamiliar real-world edge cases. And in AI research more broadly, there’s the risk of model collapse: when models are trained repeatedly on synthetic data, including their own outputs, they can drift further from reality as errors and biases accumulate.

Why Real Data Still Matters

Even small sets of authentic examples provide critical value. They:

- Anchor models to reality instead of artificial patterns.

- Capture change as slang, memes, and abuse styles evolve.

- Include outliers and rare cases that synthetic data tends to miss.

- Build trust with stakeholders who want assurance the model works in real conditions.

Without these grounding points, models risk learning only approximations of human behavior—useful in theory, but fragile in practice.

The Balance

Synthetic data is powerful for scaling quickly and reducing exposure to harmful content. But it can’t replace the messiness of real-world communication. The best approach is balance: use augmentation for breadth, and ground every system with a core of high-quality real examples collected over time.
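As a rough illustration of that balance, the sketch below keeps every real example and caps how many synthetic examples are added per real one. It is a minimal sketch under stated assumptions: the `Example` type, the `build_training_set` function, and the default ratio of 3 are hypothetical choices made for illustration, not values taken from the studies cited here.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    text: str
    label: int   # hypothetical scheme: 1 = abusive, 0 = benign
    source: str  # "real" or "synthetic", kept so provenance can be audited


def build_training_set(real, synthetic, synthetic_per_real=3.0, seed=0):
    """Keep all real examples; add at most synthetic_per_real * len(real) synthetic ones."""
    rng = random.Random(seed)
    cap = int(len(real) * synthetic_per_real)
    # Sample without replacement, never asking for more than is available.
    sampled = rng.sample(synthetic, min(cap, len(synthetic)))
    mixed = list(real) + sampled
    rng.shuffle(mixed)
    return mixed
```

Keeping the `source` field on every example makes it possible to report results on real data alone, which is the number that matters when judging whether a model works in real conditions.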

Our Dataset

In our own cyberbullying detection work, we’ve adopted this principle. Synthetic examples help us cover a wide range of insults, neutral statements, and ambiguous edge cases. At the same time, we deliberately incorporate carefully collected real-world cases—especially those gathered across different years and platforms. These authentic examples serve as anchors, letting us see how online language evolves and making sure our models don’t drift into learning only sanitized or artificial patterns.

References

- Ataman, A. (2025). Synthetic Data vs Real Data: Benefits, Challenges in 2025. AIMultiple.

- Kazemi, A., et al. (2025). Synthetic vs. Gold: The Role of LLM-Generated Labels and Data in Cyberbullying Detection. arXiv preprint arXiv:2502.15860.

- Kumar, Y., et al. (2024). Bias and Cyberbullying Detection and Data Generation Using Transformer AI Models and Top LLMs. Electronics, 13(17), 3431.

- Myers, A. (2024). AI steps into the looking glass with synthetic data. Stanford Medicine.
